- Kubernetes installed in a master-slave setup across multiple nodes

- Minikube running locally as a single node

The first option is used in real production environments, and the second is used for learning purposes. Here I've selected the second option for running WebLogic on Minikube.

Benefits of Kubernetes cluster

There are many benefits, but in brief for WebLogic:

- Kubernetes is the core component in Cloud Native Engineering platforms

- It will be extensively used in AWS, Azure and BMC (Spartacus)

- Ready to plug in real-time web applications for easy deployment and scaling

[Figure: Running WebLogic server on Minikube Kubernetes cluster]

In this article, I will be discussing:

- How to prepare VirtualBox for Minikube

- Installation of Docker CE in Ubuntu

- Installation of Minikube in Ubuntu

- Deploying the Minikube Kubernetes dashboard

- Running a WebLogic container in a pod

Prerequisites for Minikube in Ubuntu Virtual Box

Install VirtualBox, or upgrade it if it is not the latest version, and launch a new Ubuntu virtual disk image. Ubuntu 16.04 Xenial and Ubuntu 17.10 Artful are both well suited to this experiment. Select the virtual disk from the uncompressed folder, and assign 4 GB of RAM and 2 CPUs to the virtual machine. Start the Ubuntu 16.04 VirtualBox image and log in as the osboxes user. Install the Guest Additions, then configure a shared folder to hold the software you want to share between the host machine and the guest machine. Enable the shared clipboard (bidirectional) and drag and drop (bidirectional).

1. Open a terminal, use sudo -i to switch to the root user, and update the apt package index:

apt-get update

2. Install packages to allow apt to use a repository over HTTPS:

apt-get install apt-transport-https ca-certificates curl software-properties-common

3. Add Docker’s official GPG key:

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

4. Verify that you now have the key with the fingerprint 9DC8 5822 9FC7 DD38 854A E2D8 8D81 803C 0EBF CD88, by searching for the last 8 characters of the fingerprint.

apt-key fingerprint 0EBFCD88

[Figure: Docker fingerprint validation in Ubuntu]
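The visual comparison in step 4 can also be scripted. The following is a minimal sketch, not part of the original procedure: the helper names `normalize_fpr` and `fingerprint_matches` are hypothetical, and the sketch assumes the fingerprint appears on a single line of the `apt-key fingerprint` output.

```shell
# Expected fingerprint, copied from step 4 above.
EXPECTED_FPR="9DC8 5822 9FC7 DD38 854A E2D8 8D81 803C 0EBF CD88"

# normalize_fpr TEXT: remove all whitespace and upper-case the text,
# so differences in spacing between key listings do not matter.
normalize_fpr() {
  printf '%s' "$1" | tr -d '[:space:]' | tr '[:lower:]' '[:upper:]'
}

# fingerprint_matches: read a key listing on stdin and succeed only if
# some line contains the expected fingerprint.
# Note: plain POSIX sh has no local variables, so want/line are global.
fingerprint_matches() {
  want=$(normalize_fpr "$EXPECTED_FPR")
  while IFS= read -r line; do
    case "$(normalize_fpr "$line")" in
      *"$want"*) return 0 ;;
    esac
  done
  return 1
}

# Usage on the Ubuntu guest (commented out so that loading the helpers
# has no side effects):
# apt-key fingerprint 0EBFCD88 | fingerprint_matches && echo "Docker key OK"
```

If the function fails, do not continue with the installation; re-download the key and compare again.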

5. Use the following command to set up the stable repository. You always need the stable repository, even if you want to install builds from the edge or test repositories as well. To add the edge or test repository, add the word edge or test (or both) after the word stable in the command below.

add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"
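To make the "add the word edge or test after stable" rule concrete, here is a small sketch that builds the repository line from a release codename and a list of channels. The function name `docker_repo_line` is hypothetical and exists only for illustration.

```shell
# docker_repo_line CODENAME CHANNEL...: print the apt source line for the
# given Ubuntu codename and one or more channels (stable, edge, test).
docker_repo_line() {
  codename=$1
  shift
  # "$*" joins the remaining arguments with single spaces.
  echo "deb [arch=amd64] https://download.docker.com/linux/ubuntu $codename $*"
}

# Usage on the Ubuntu guest (commented out; it changes the apt sources):
# add-apt-repository "$(docker_repo_line "$(lsb_release -cs)" stable edge)"
```

Calling `docker_repo_line xenial stable` produces the same line that the command above passes to add-apt-repository on Ubuntu 16.04.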

Note: Starting with Docker 17.06, stable releases are also pushed to the edge and test repositories.

6. Update the apt package index:

apt-get update

7. Install the latest version of Docker CE:

apt-get install docker-ce

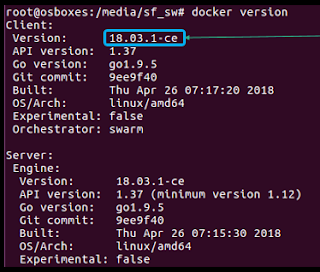

8. As the root user, run the commands below to check whether Docker is installed and running:

docker version

docker info
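If you prefer a single yes/no answer over reading the full output, the check can be wrapped in a function. This is a sketch under the assumption that `docker info` exits with a non-zero status when the daemon cannot be reached; `docker_ready` is a hypothetical helper name.

```shell
# docker_ready: report whether the docker client exists and the daemon answers.
# Returns 0 when ready, 1 when the client is missing, 2 when the daemon
# does not respond.
docker_ready() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker client not found" >&2
    return 1
  fi
  if ! docker info >/dev/null 2>&1; then
    echo "docker daemon not reachable" >&2
    return 2
  fi
  echo "docker is ready"
}

# Usage: docker_ready || echo "fix Docker before installing Minikube"
```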

Install minikube

Minikube is recommended for development environments, or for trying out Kubernetes quickly in a local VM or laptop. Docker Swarm is a similar orchestration tool to Kubernetes. Kubernetes is open source, and it also supports non-Docker containers such as rkt (Rocket) containers.

1. First, download minikube and kubectl:

curl -Lo minikube https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64 && chmod +x minikube && sudo mv minikube /usr/local/bin/

Now download kubectl and copy it to /usr/local/bin:

curl -Lo kubectl https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/kubectl && chmod +x kubectl && cp kubectl /usr/local/bin

2. Set the Minikube environment variables:

export MINIKUBE_WANTUPDATENOTIFICATION=false

export MINIKUBE_WANTREPORTERRORPROMPT=false

export MINIKUBE_HOME=$HOME

export CHANGE_MINIKUBE_NONE_USER=true

mkdir $HOME/.kube || true

touch $HOME/.kube/config

export KUBECONFIG=$HOME/.kube/config
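The exports above only last for the current shell session. One way to reuse them, sketched here as an assumption rather than as part of the original steps, is to write them to a file that can be sourced later. The function name `write_minikube_env` and the file name in the usage line are hypothetical; the mkdir and touch steps above are still needed separately.

```shell
# write_minikube_env FILE: write the Minikube exports from step 2 to FILE.
# The heredoc delimiter is quoted, so $HOME is written literally and only
# expanded when the file is sourced.
write_minikube_env() {
  cat > "$1" <<'EOF'
export MINIKUBE_WANTUPDATENOTIFICATION=false
export MINIKUBE_WANTREPORTERRORPROMPT=false
export MINIKUBE_HOME=$HOME
export CHANGE_MINIKUBE_NONE_USER=true
export KUBECONFIG=$HOME/.kube/config
EOF
}

# Usage (hypothetical file name):
# write_minikube_env "$HOME/.minikube-env"
# . "$HOME/.minikube-env"
```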

3. Let's start Minikube with the none driver. Multiple drivers are available, and each one suits a particular platform on which the host machine runs. For this experiment vm-driver=none is the best fit: we are already inside a VirtualBox virtual machine, so the Kubernetes components run directly on the Ubuntu guest using its Docker installation instead of inside another nested VM.

minikube start --vm-driver=none

We can now use kubectl commands to interact with the Minikube cluster. If you hit any issue related to certificates, the fix is simply to stop Minikube and start it again.
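Right after startup the cluster may need a little time before kubectl can reach it. The sketch below polls `kubectl get nodes` until it succeeds; the function name `wait_for_cluster` and the default of 30 tries with a 5 second delay are assumptions chosen for illustration.

```shell
# wait_for_cluster [TRIES] [DELAY]: poll until `kubectl get nodes` succeeds.
# Note: plain POSIX sh has no local variables, so tries/delay/i are global.
wait_for_cluster() {
  tries=${1:-30}
  delay=${2:-5}
  i=1
  while [ "$i" -le "$tries" ]; do
    if kubectl get nodes >/dev/null 2>&1; then
      echo "cluster reachable after $i attempt(s)"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "cluster not reachable after $tries attempt(s)" >&2
  return 1
}

# Usage: minikube start --vm-driver=none && wait_for_cluster
```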

Understanding the Kubernetes Head Node

Minikube internally runs the Kubernetes head node, which some call the master node. The whole cluster is controlled from this node, which contains all of these components:

- etcd

- scheduler

- controller

- apiserver

- addons-manager

kubectl get po --all-namespaces

[Figure: Head node working with the set of namespaces]
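To check the listing against the component list above without reading it by eye, a small filter can report which names never appear. This is only a sketch: `missing_components` is a hypothetical helper, and the exact pod names depend on your Minikube version, so pass whatever name fragments you expect to see.

```shell
# missing_components NAME...: read a pod listing on stdin and print every
# NAME that does not appear on any line of it.
missing_components() {
  listing=$(cat)
  for name in "$@"; do
    printf '%s\n' "$listing" | grep -q -- "$name" || echo "$name"
  done
}

# Usage on the cluster:
# kubectl get po --all-namespaces | missing_components etcd scheduler controller apiserver
```

An empty result means every name was found in the listing.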

Kubelet is the Kubernetes node agent. It is always running and monitors all the components; if any of them goes down, it brings that component's service back up automatically.

etcd is the key-value store that holds the data used by the Kubernetes cluster. It was developed by CoreOS.

Every Kubernetes master has a scheduler, which decides on which node each newly created pod will run.

The Kubernetes master has a controller, which internally talks to the Docker environment and the kubelet. The kubelet acts as an agent for the Kubernetes master. Their internal communication uses RSA certificates.

The apiserver on the master serves client requests and routes them to the available nodes. A node may have multiple pods of the same category.

Think Differently

There are many thousands of examples that deploy a hello-world app, so let's think differently and use the Minikube dashboard to deploy a real web application: the WebLogic administration console, deployed as a service named wls-app. Minikube provides a nice UI dashboard that shows the workload on the cluster, including the status of pods and services, and it also allows us to create and manage deployments.

Let's start the dashboard

minikube dashboard

When you run the above command, it tries to launch the browser and open the dashboard.

[Figure: Minikube dashboard]

Finally stopping the minikube

minikube stop

Stopping local Kubernetes cluster...

Machine stopped.

Next post

Learning 2: MiniKube Kubernetes Cluster WebLogic Application deployment

I hope you liked this experiment. Please share it with your techie friends, and share your comments.